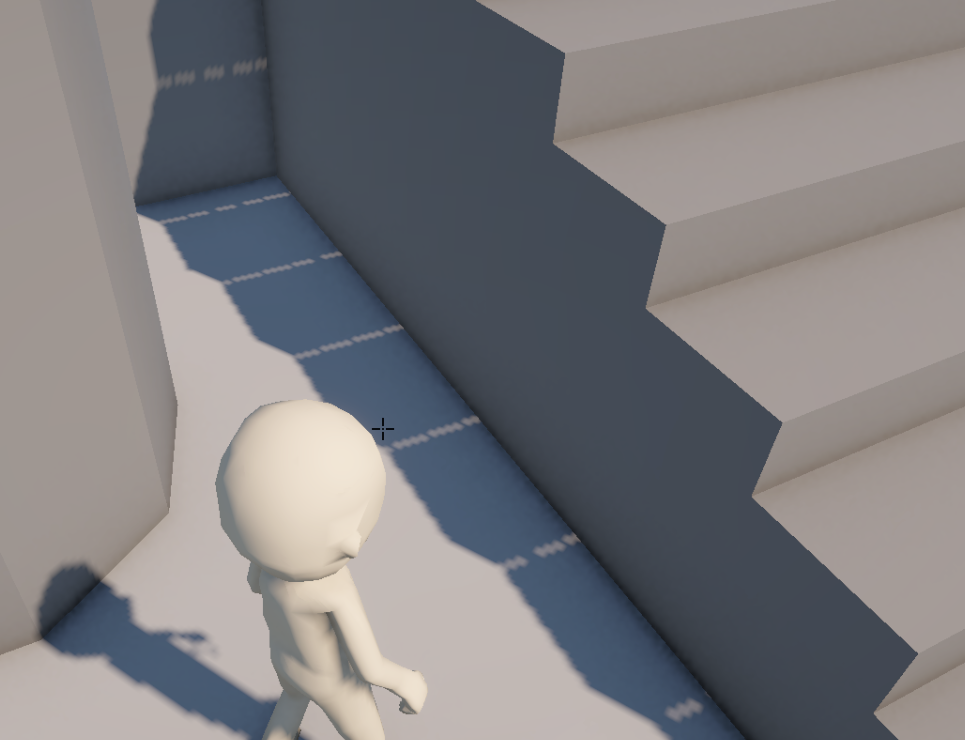

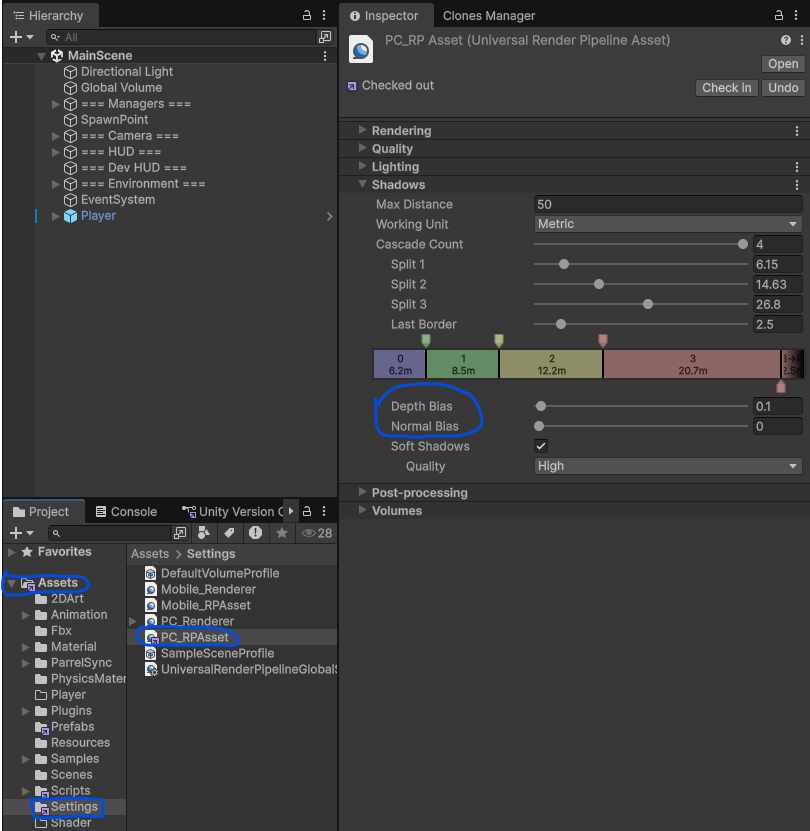

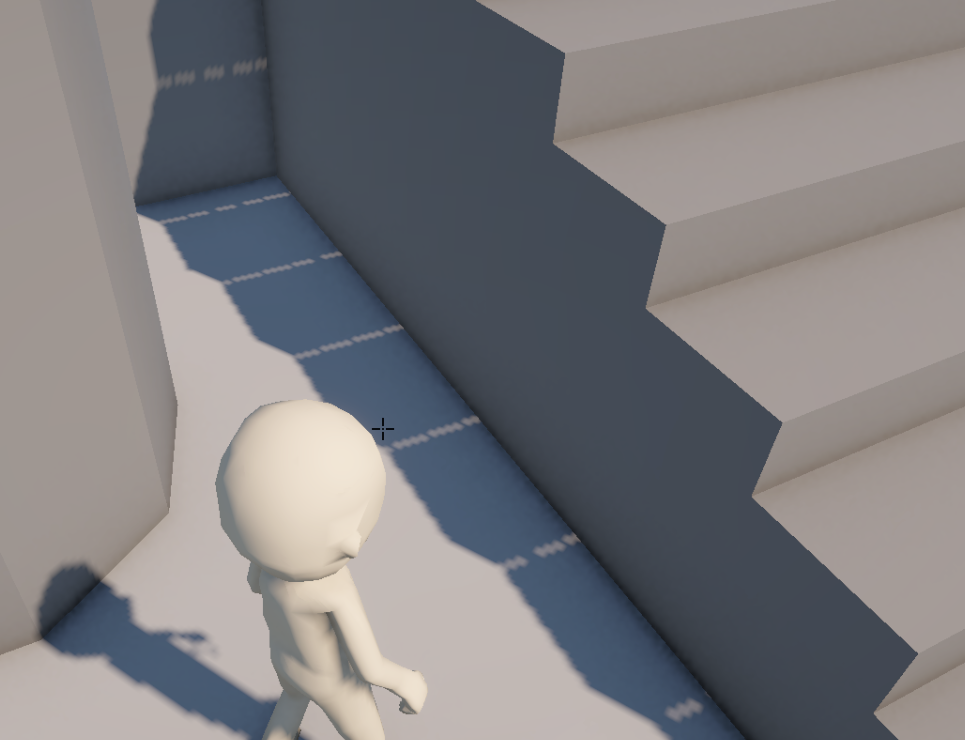

In a default Unity URP project, the shadow settings seem to be off.

Below is a screenshot from before and after changing the settings.

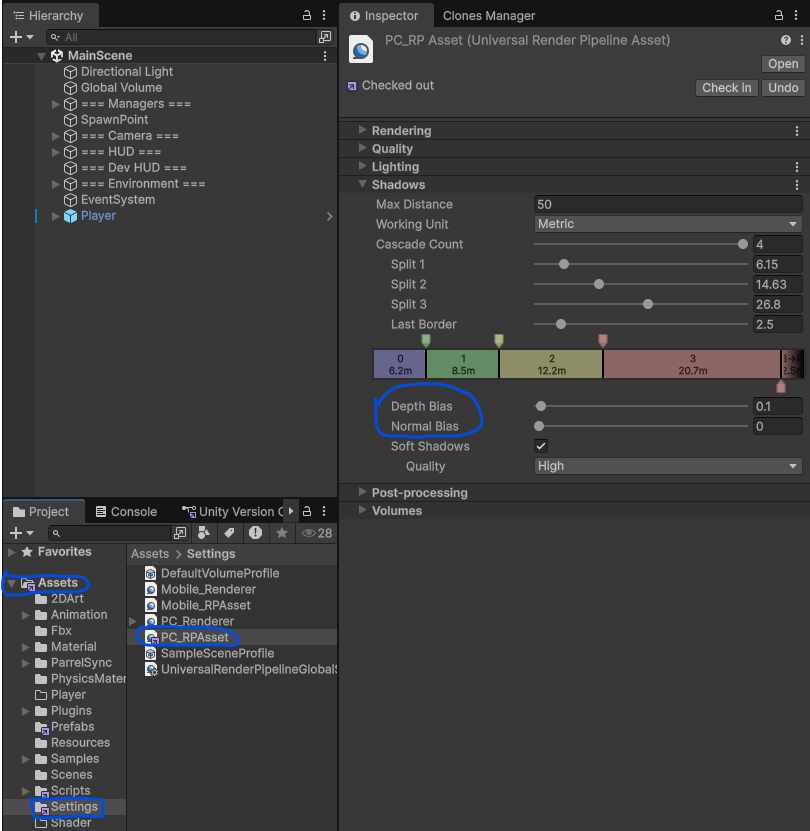

1. Open the Project window (file browser)

2. Go to Assets -> Settings

3. Select PC_RPAsset

4. Adjust Depth Bias / Normal Bias in the Shadows section until the shadows look right.

Random notes

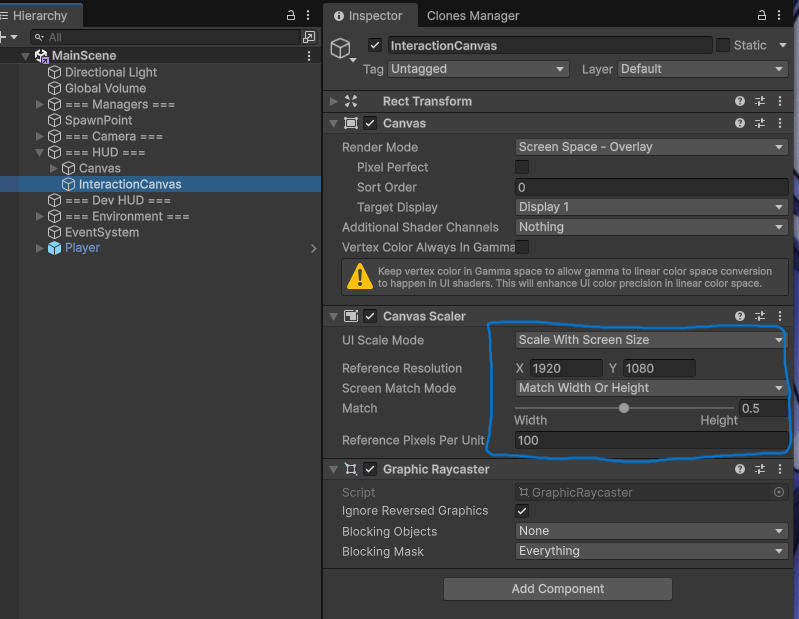

I often forget to set up the Canvas Scaler so the UI scales with screen size.

These settings cover most cases when targeting a 1080p environment.

1. UI Scale Mode: Scale With Screen Size

2. Reference Resolution: 1920 x 1080 (1080p)

3. Screen Match Mode: Match Width Or Height

4. Set Match to 0.5
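To see what Match = 0.5 actually does, here is a minimal Python sketch of the log-space blend that, as far as I know, the Canvas Scaler uses in Match Width Or Height mode. Treat it as an approximation, not Unity's code: `canvas_scale_factor` is a hypothetical helper, and the defaults assume the 1920 x 1080 reference resolution from the list above.

```python
import math

def canvas_scale_factor(screen_w, screen_h, ref_w=1920.0, ref_h=1080.0, match=0.5):
    """Hypothetical helper approximating Canvas Scaler's Match Width Or Height mode.

    The width and height ratios are blended in log2 space: match=0 follows
    width only, match=1 follows height only, and match=0.5 gives the
    geometric mean of the two ratios.
    """
    log_w = math.log2(screen_w / ref_w)
    log_h = math.log2(screen_h / ref_h)
    # Linear interpolation between the two log ratios, weighted by `match`.
    log_avg = log_w + (log_h - log_w) * match
    return 2.0 ** log_avg

# Compare a few common resolutions against the 1080p reference.
for w, h in [(1920, 1080), (3840, 2160), (1280, 720), (2560, 1080)]:
    print(f"{w}x{h}: scale {canvas_scale_factor(w, h):.3f}")
```

For same-aspect screens (4K, 720p), both ratios agree, so Match has no effect. On an ultrawide 2560 x 1080 screen, 0.5 lands between the width ratio (about 1.33) and the height ratio (1.0) at roughly 1.155, instead of committing fully to either axis.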

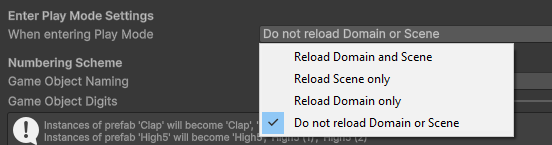

Feeling frustrated by how long it takes to enter Play Mode when testing your game?

1. Edit -> Project Settings

2. Select Editor in the left-hand panel

3. Change the “When entering Play Mode” setting to “Do not reload Domain or Scene”.

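*With domain reload disabled, static fields and static event subscriptions are not reset between Play sessions. Read the Unity documentation on configurable Enter Play Mode to understand when you should still be reloading.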